Using a model from HuggingFace

- This framework supports training such models and storing the results.


# openml imports
import openml
import openml_pytorch as op
from openml_pytorch.callbacks import TestCallback
from openml_pytorch.metrics import accuracy
from openml_pytorch.trainer import convert_to_rgb

# pytorch imports
from torch.utils.tensorboard.writer import SummaryWriter
from torchvision.transforms import Compose, Resize, ToPILImage, ToTensor, Lambda
import torchvision

# other imports
import logging
import warnings

# set up logging
openml.config.logger.setLevel(logging.DEBUG)
op.config.logger.setLevel(logging.DEBUG)
warnings.simplefilter(action='ignore')

Data

Define image transformations


transform = Compose(
    [
        ToPILImage(),  # Convert tensor to PIL Image to ensure PIL Image operations can be applied.
        Lambda(convert_to_rgb),  # Convert PIL Image to RGB if it's not already.
        Resize((64, 64)),  # Resize the image.
        ToTensor(),  # Convert the PIL Image back to a tensor.
    ]
)

Configure the Data Module and Choose a Task

- Make sure the data is present in the `file_dir` directory, and that `filename_col` is set correctly, with this column pointing to where your data is stored.
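A quick sanity check before training can catch path problems early. The sketch below is only an illustration: `find_missing_files` is a hypothetical helper, not part of `openml_pytorch`, and it assumes the values in the filename column are paths relative to `file_dir`.

```python
from pathlib import Path
import tempfile


def find_missing_files(file_dir, filenames):
    """Return the entries of `filenames` that do not exist under `file_dir`."""
    root = Path(file_dir)
    return [name for name in filenames if not (root / name).is_file()]


# Self-contained demonstration using a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "cat.png").touch()
    # Hypothetical contents of the filename column (assumed relative paths).
    column_values = ["cat.png", "dog.png"]
    missing = find_missing_files(tmp, column_values)
    print(missing)
```

With a real dataset you would pass your own `file_dir` and the values of the `filename_col` column; an empty result means every referenced file was found.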


data_module = op.OpenMLDataModule(
    type_of_data="image",
    file_dir="datasets",
    filename_col="image_path",
    target_mode="categorical",
    target_column="label",
    batch_size=64,
    transform=transform,
)

# Download the OpenML task for tiniest imagenet
task = openml.tasks.get_task(363295)

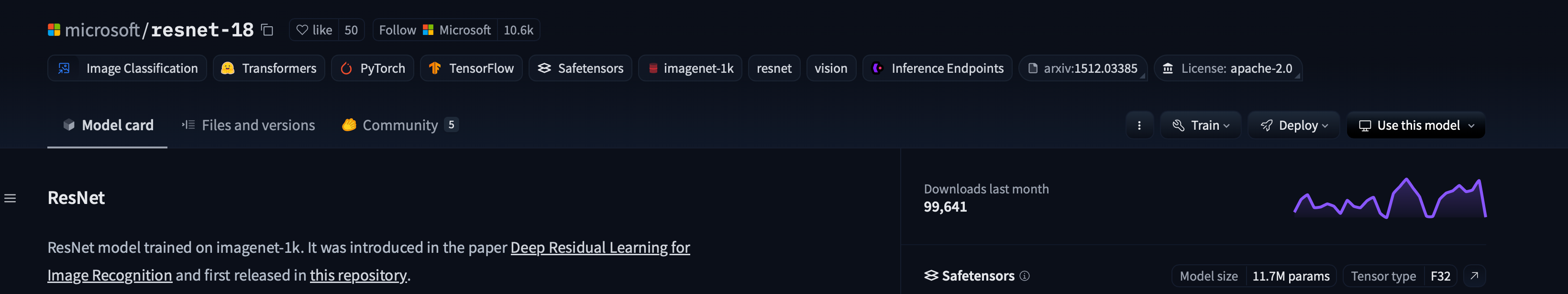

Model

- First, get the model you need.
  - Click "Use this model" -> "Transformers" to get the code you need.
- Then modify the model to work with the number of classes in your dataset.
  - This is something you would need to do anyway if you were transfer learning on a dataset different from the one the model was trained on.
  - You can use the `model` object to access the model and modify the final layer to match the number of classes in your dataset. Note that this depends on the model you are using, and you might need to do this manually.
    - In general, try `model.classifier`, `model.fc`, or `model.classifier[-1]` to access the final layer of the model.
    - If this doesn't work, print the model and look at the architecture to find the correct layer to modify.
    - HF provides a "num_labels" parameter, but this does not always work as expected, so it is better to modify the final layer manually.
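When the attribute name of the final layer is not obvious, one option is to search the module tree for it. The following is a minimal sketch, assuming the classification head is the last `torch.nn.Linear` in registration order, which holds for many but not all architectures. `replace_last_linear` is a hypothetical helper, and the toy network is a stand-in for a pretrained model, not a HuggingFace model.

```python
import torch
from torch import nn


def replace_last_linear(model: nn.Module, num_classes: int) -> str:
    """Swap the last nn.Linear submodule for one with `num_classes` outputs.

    Returns the dotted name of the replaced layer.
    """
    last_name = None
    for name, module in model.named_modules():
        if isinstance(module, nn.Linear):
            last_name = name
    if not last_name:
        raise ValueError("no replaceable nn.Linear submodule found")

    # Walk to the parent module so the child can be reassigned.
    parent = model
    *path, leaf = last_name.split(".")
    for part in path:
        parent = getattr(parent, part)
    old = getattr(parent, leaf)
    setattr(parent, leaf, nn.Linear(old.in_features, num_classes))
    return last_name


# Toy stand-in for a pretrained network (hypothetical).
toy = nn.Sequential()
toy.add_module("features", nn.Sequential(nn.Conv2d(3, 8, 3), nn.AdaptiveAvgPool2d(1)))
toy.add_module("classifier", nn.Sequential(nn.Flatten(), nn.Linear(8, 1000)))

replaced = replace_last_linear(toy, num_classes=200)
logits = toy(torch.zeros(2, 3, 64, 64))
print(replaced, tuple(logits.shape))
```

Printing the returned name is a cheap way to confirm that the layer you intended was the one replaced; if it is not the head, fall back to editing the layer by hand.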


import torch
from transformers import AutoImageProcessor, AutoModelForImageClassification

processor = AutoImageProcessor.from_pretrained("microsoft/resnet-18")
model_o = AutoModelForImageClassification.from_pretrained("microsoft/resnet-18")


class TransformerCompatibility(torch.nn.Module):
    def __init__(self, model_from_pretrained, num_classes) -> None:
        super(TransformerCompatibility, self).__init__()
        self.model = model_from_pretrained
        # Use this variant instead if the classifier is a single Linear layer:
        # self.model.classifier = torch.nn.Linear(self.model.classifier.in_features, num_classes)
        # Here the classifier is a container whose module '1' is the final Linear layer.
        self.model.classifier._modules['1'] = torch.nn.Linear(self.model.classifier._modules['1'].in_features, num_classes)

    def forward(self, input):
        # The model expects input of shape (batch_size, num_channels, height, width).
        # Add a batch dimension if a single image was passed.
        if len(input.shape) == 3:
            input = input.unsqueeze(0)
        # Forward pass through the model
        outputs = self.model(input)
        # The model returns an output object; the logits are its `logits` attribute.
        logits = outputs.logits
        return logits


model = TransformerCompatibility(model_o, num_classes=200)

Train your model on the data

- Note that by default, OpenML runs a 10-fold cross-validation on the data. You cannot change this for now.
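To make the cost of this concrete: with 10 folds, the model is trained and evaluated 10 times, each time holding out a different tenth of the data. The sketch below only illustrates the idea. `k_fold_indices` is a hypothetical helper; OpenML tasks come with their own predefined splits, which this unshuffled, unstratified version does not reproduce.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_idx, test_idx) lists for k contiguous, near-equal folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(25, k=10))
print(len(folds), [len(test) for _, test in folds])
```

Every sample appears in exactly one test fold, so with `epoch_count=1` the trainer still makes 10 passes in total, one per fold.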


import torch

trainer = op.OpenMLTrainerModule(
    experiment_name="Tiny ImageNet",
    data_module=data_module,
    verbose=True,
    epoch_count=1,
    metrics=[accuracy],
    # Remove the TestCallback when you are done testing your pipeline. Having it here will make the pipeline run for a very short time.
    callbacks=[
        # TestCallback,
    ],
)

op.config.trainer = trainer
run = openml.runs.run_model_on_task(model, task, avoid_duplicate_runs=False)


openml.config.apikey = ''

run = op.add_experiment_info_to_run(run=run, trainer=trainer)

run.publish()
