Note

Go to the end to download the full example code

Datasets¶

How to list and download datasets.

# License: BSD 3-Clauses

import openml

import pandas as pd

from openml.datasets import edit_dataset, fork_dataset, get_dataset

Exercise 0¶

List datasets

Use the output_format parameter to select output type

Default gives ‘dict’ (other option: ‘dataframe’, see below)

Note: list_datasets will return a pandas dataframe by default from 0.15. When using openml-python 0.14, list_datasets will warn you to use output_format=’dataframe’.

datalist = openml.datasets.list_datasets(output_format="dataframe")

datalist = datalist[["did", "name", "NumberOfInstances", "NumberOfFeatures", "NumberOfClasses"]]

print(f"First 10 of {len(datalist)} datasets...")

datalist.head(n=10)

# The same can be done with lesser lines of code

openml_df = openml.datasets.list_datasets(output_format="dataframe")

openml_df.head(n=10)

First 10 of 5301 datasets...

Exercise 1¶

Find datasets with more than 10000 examples.

Find a dataset called ‘eeg_eye_state’.

Find all datasets with more than 50 classes.

datalist[datalist.NumberOfInstances > 10000].sort_values(["NumberOfInstances"]).head(n=20)

""

datalist.query('name == "eeg-eye-state"')

""

datalist.query("NumberOfClasses > 50")

Download datasets¶

# This is done based on the dataset ID.

dataset = openml.datasets.get_dataset(1471)

# Print a summary

print(

f"This is dataset '{dataset.name}', the target feature is "

f"'{dataset.default_target_attribute}'"

)

print(f"URL: {dataset.url}")

print(dataset.description[:500])

/home/runner/work/openml-python/openml-python/openml/datasets/functions.py:437: FutureWarning: Starting from Version 0.15 `download_data`, `download_qualities`, and `download_features_meta_data` will all be ``False`` instead of ``True`` by default to enable lazy loading. To disable this message until version 0.15 explicitly set `download_data`, `download_qualities`, and `download_features_meta_data` to a bool while calling `get_dataset`.

warnings.warn(

This is dataset 'eeg-eye-state', the target feature is 'Class'

URL: https://api.openml.org/data/v1/download/1587924/eeg-eye-state.arff

**Author**: Oliver Roesler

**Source**: [UCI](https://archive.ics.uci.edu/ml/datasets/EEG+Eye+State), Baden-Wuerttemberg, Cooperative State University (DHBW), Stuttgart, Germany

**Please cite**: [UCI](https://archive.ics.uci.edu/ml/citation_policy.html)

All data is from one continuous EEG measurement with the Emotiv EEG Neuroheadset. The duration of the measurement was 117 seconds. The eye state was detected via a camera during the EEG measurement and added later manually to the file after

Get the actual data.

openml-python returns data as pandas dataframes (stored in the eeg variable below), and also some additional metadata that we don’t care about right now.

eeg, *_ = dataset.get_data()

You can optionally choose to have openml separate out a column from the dataset. In particular, many datasets for supervised problems have a set default_target_attribute which may help identify the target variable.

X, y, categorical_indicator, attribute_names = dataset.get_data(

target=dataset.default_target_attribute

)

print(X.head())

print(X.info())

V1 V2 V3 V4 ... V11 V12 V13 V14

0 4329.23 4009.23 4289.23 4148.21 ... 4211.28 4280.51 4635.90 4393.85

1 4324.62 4004.62 4293.85 4148.72 ... 4207.69 4279.49 4632.82 4384.10

2 4327.69 4006.67 4295.38 4156.41 ... 4206.67 4282.05 4628.72 4389.23

3 4328.72 4011.79 4296.41 4155.90 ... 4210.77 4287.69 4632.31 4396.41

4 4326.15 4011.79 4292.31 4151.28 ... 4212.82 4288.21 4632.82 4398.46

[5 rows x 14 columns]

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 14980 entries, 0 to 14979

Data columns (total 14 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 V1 14980 non-null float64

1 V2 14980 non-null float64

2 V3 14980 non-null float64

3 V4 14980 non-null float64

4 V5 14980 non-null float64

5 V6 14980 non-null float64

6 V7 14980 non-null float64

7 V8 14980 non-null float64

8 V9 14980 non-null float64

9 V10 14980 non-null float64

10 V11 14980 non-null float64

11 V12 14980 non-null float64

12 V13 14980 non-null float64

13 V14 14980 non-null float64

dtypes: float64(14)

memory usage: 1.6 MB

None

Sometimes you only need access to a dataset’s metadata. In those cases, you can download the dataset without downloading the data file. The dataset object can be used as normal. Whenever you use any functionality that requires the data, such as get_data, the data will be downloaded. Starting from 0.15, not downloading data will be the default behavior instead. The data will be downloading automatically when you try to access it through openml objects, e.g., using dataset.features.

dataset = openml.datasets.get_dataset(1471, download_data=False)

/home/runner/work/openml-python/openml-python/openml/datasets/functions.py:437: FutureWarning: Starting from Version 0.15 `download_data`, `download_qualities`, and `download_features_meta_data` will all be ``False`` instead of ``True`` by default to enable lazy loading. To disable this message until version 0.15 explicitly set `download_data`, `download_qualities`, and `download_features_meta_data` to a bool while calling `get_dataset`.

warnings.warn(

Exercise 2¶

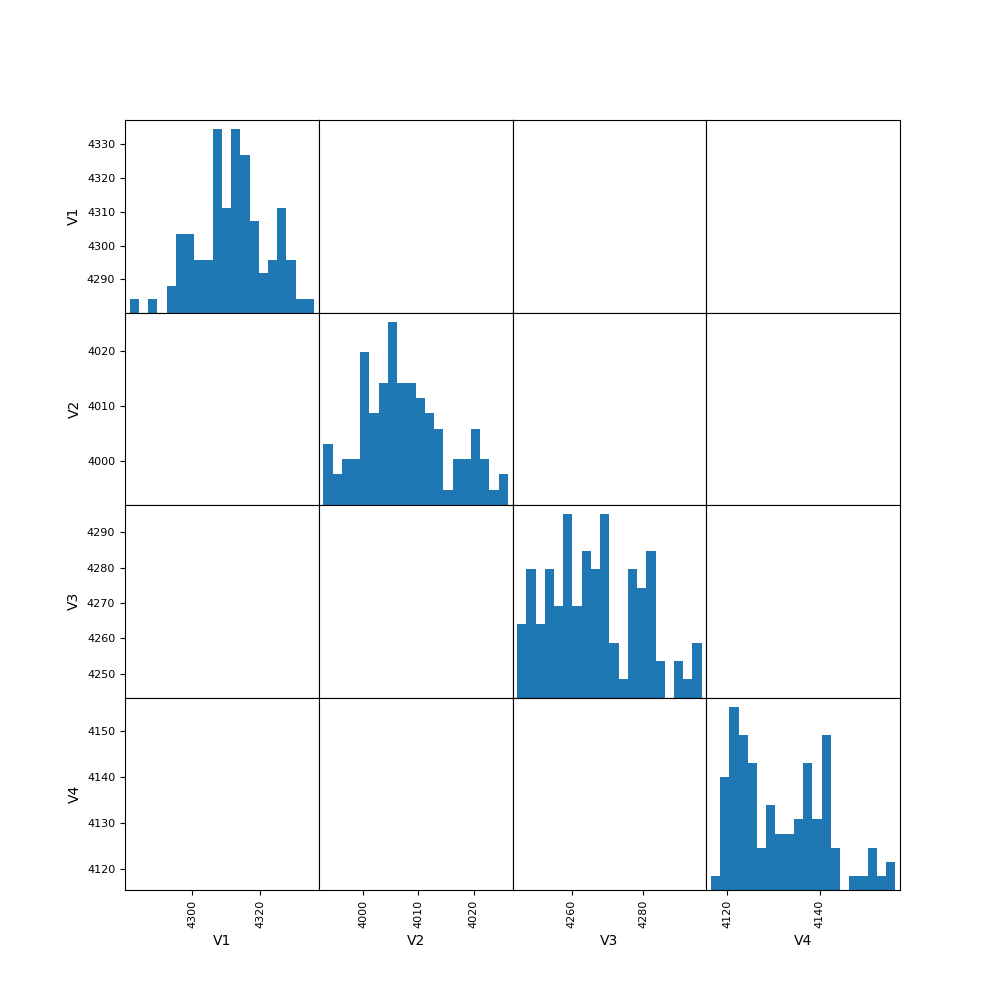

Explore the data visually.

eegs = eeg.sample(n=1000)

_ = pd.plotting.scatter_matrix(

X.iloc[:100, :4],

c=y[:100],

figsize=(10, 10),

marker="o",

hist_kwds={"bins": 20},

alpha=0.8,

cmap="plasma",

)

/opt/hostedtoolcache/Python/3.8.17/x64/lib/python3.8/site-packages/pandas/plotting/_matplotlib/misc.py:97: UserWarning: No data for colormapping provided via 'c'. Parameters 'cmap' will be ignored

ax.scatter(

Edit a created dataset¶

This example uses the test server, to avoid editing a dataset on the main server.

Warning

This example uploads data. For that reason, this example connects to the test server at test.openml.org. This prevents the main server from crowding with example datasets, tasks, runs, and so on. The use of this test server can affect behaviour and performance of the OpenML-Python API.

openml.config.start_using_configuration_for_example()

/home/runner/work/openml-python/openml-python/openml/config.py:184: UserWarning: Switching to the test server https://test.openml.org/api/v1/xml to not upload results to the live server. Using the test server may result in reduced performance of the API!

warnings.warn(

Edit non-critical fields, allowed for all authorized users: description, creator, contributor, collection_date, language, citation, original_data_url, paper_url

desc = (

"This data sets consists of 3 different types of irises' "

"(Setosa, Versicolour, and Virginica) petal and sepal length,"

" stored in a 150x4 numpy.ndarray"

)

did = 128

data_id = edit_dataset(

did,

description=desc,

creator="R.A.Fisher",

collection_date="1937",

citation="The use of multiple measurements in taxonomic problems",

language="English",

)

edited_dataset = get_dataset(data_id)

print(f"Edited dataset ID: {data_id}")

Edited dataset ID: 128

Editing critical fields (default_target_attribute, row_id_attribute, ignore_attribute) is allowed only for the dataset owner. Further, critical fields cannot be edited if the dataset has any tasks associated with it. To edit critical fields of a dataset (without tasks) owned by you, configure the API key: openml.config.apikey = ‘FILL_IN_OPENML_API_KEY’ This example here only shows a failure when trying to work on a dataset not owned by you:

try:

data_id = edit_dataset(1, default_target_attribute="shape")

except openml.exceptions.OpenMLServerException as e:

print(e)

https://test.openml.org/api/v1/xml/data/edit returned code 1065: Critical features default_target_attribute, row_id_attribute and ignore_attribute can be edited only by the owner. Fork the dataset if changes are required. - None

Fork dataset¶

Used to create a copy of the dataset with you as the owner. Use this API only if you are unable to edit the critical fields (default_target_attribute, ignore_attribute, row_id_attribute) of a dataset through the edit_dataset API. After the dataset is forked, you can edit the new version of the dataset using edit_dataset.

data_id = fork_dataset(1)

print(data_id)

data_id = edit_dataset(data_id, default_target_attribute="shape")

print(f"Forked dataset ID: {data_id}")

openml.config.stop_using_configuration_for_example()

2766

Forked dataset ID: 2766

Total running time of the script: ( 0 minutes 9.768 seconds)