AMLB

An AutoML Benchmark

Comparing different AutoML frameworks is notoriously challenging. AMLB is an open and extensible benchmark that follows best practices and avoids common mistakes when comparing AutoML frameworks.

Easy to Use

You can run an entire benchmark with a single command! The AutoML benchmark tool automates the installation of the AutoML framework, the experimental setup, and the execution of the experiment.

> python runbenchmark.py autosklearn openml/s/269 1h8c
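Read positionally, the command names a framework (`autosklearn`), a benchmark (`openml/s/269`) and a resource constraint (`1h8c`). The sketch below shows one reading of the constraint name. It assumes the name follows a `<hours>h<cores>c` pattern, so `1h8c` means a one-hour budget on 8 cores. `parse_constraint` and `Constraint` are hypothetical helpers for illustration only. The tool itself takes its constraint definitions from its own configuration rather than parsing names like this.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Constraint:
    """Resource budget implied by a constraint name (hypothetical helper type)."""
    runtime_seconds: int
    cores: int


def parse_constraint(name: str) -> Constraint:
    """Split a name such as '1h8c' into a runtime budget and a core count.

    Assumes the '<hours>h<cores>c' naming pattern; any other name raises
    ValueError instead of being guessed at.
    """
    match = re.fullmatch(r"(\d+)h(\d+)c", name)
    if match is None:
        raise ValueError(f"unrecognised constraint name: {name!r}")
    hours, cores = (int(group) for group in match.groups())
    return Constraint(runtime_seconds=hours * 3600, cores=cores)


print(parse_constraint("1h8c"))  # Constraint(runtime_seconds=3600, cores=8)
```

The regular expression is anchored with `re.fullmatch`, so malformed names fail loudly instead of being partially parsed.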

See the installation guide for setup instructions.

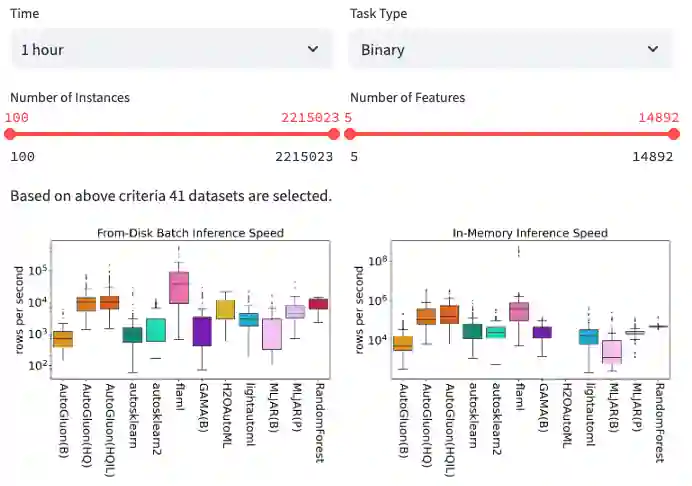

Visualize Results

The results can be visualized with our interactive visualization tool or one of our notebooks. These include critical difference diagrams, scaled performance plots, and more!


Easy to Extend

Adding a framework and adding a dataset are both easy. These extensions can be kept completely private or shared with the community. For datasets, it is even possible to work with OpenML tasks and suites directly!
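The example command refers to an OpenML suite as `openml/s/269`. This sketch assumes that single OpenML tasks use the analogous `openml/t/<id>` form. It shows how such a reference splits into the kind of OpenML object and its numeric ID. `parse_openml_reference` is a hypothetical helper, not part of the benchmark tool's API.

```python
from typing import Tuple

# Assumed mapping from the middle path segment to the kind of OpenML object.
_KINDS = {"s": "suite", "t": "task"}


def parse_openml_reference(ref: str) -> Tuple[str, int]:
    """Split 'openml/s/269' into ('suite', 269); hypothetical helper."""
    parts = ref.split("/")
    if len(parts) != 3 or parts[0] != "openml" or parts[1] not in _KINDS:
        raise ValueError(f"not an OpenML reference: {ref!r}")
    if not parts[2].isdigit():
        raise ValueError(f"OpenML IDs are numeric, got {parts[2]!r}")
    return _KINDS[parts[1]], int(parts[2])


print(parse_openml_reference("openml/s/269"))  # ('suite', 269)
```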

See the guide on extending the benchmark for details.

📄 Paper

Our JMLR paper introduces the benchmark. It includes an in-depth discussion of the design decisions and the benchmark's limitations, as well as a multi-faceted analysis of results from a large-scale comparison of 9 frameworks on more than 100 tasks, conducted in 2023.

🧑‍💻 Code

The entire benchmark tool is open source and developed on GitHub. The GitHub discussion board and issue tracker are the main ways we interact with the community.

AutoML Frameworks

Many AutoML frameworks are already integrated with the AutoML benchmark tool, and adding more is easy. Our framework overview page has more information about the different frameworks. The icons below link directly to their respective GitHub repositories.

Community

We welcome all contributions to the AutoML benchmark. Our goal is to provide the best benchmark tools for AutoML research, and we can't do that on our own. Contributions are appreciated in many forms, including feedback on the benchmark design, feature requests, bug reports, code and documentation contributions, and more. Why not stop by our welcome board and let us know what got you interested in the benchmark?